Nick 11 (Member, joined Mar 29, 2020)

Hello

User-agent: *

Crawl-Delay: 20

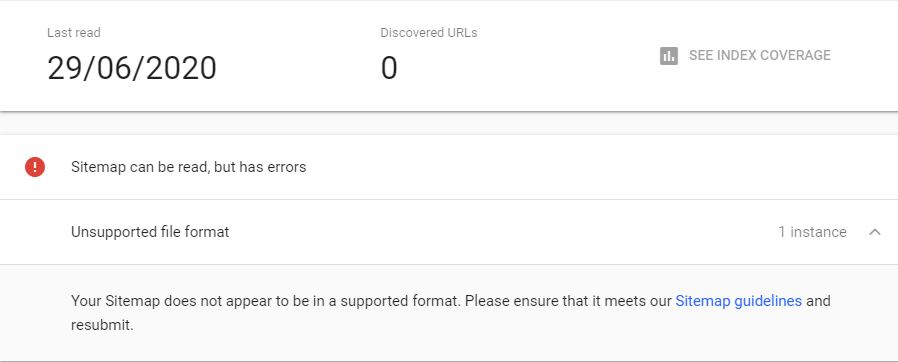

My rankings have dropped. I checked /robots.txt and the above is what I get. I have also attached a screenshot of the error message from Google Search Console.

Any advice appreciated. I have been on to my web host's chat support, who say I should contact a techie.

Thanks
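For anyone who wants to check what those two robots.txt lines actually permit, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The URL `https://example.com/` and the `Googlebot` user-agent string are placeholders for illustration, not taken from my site, and the rules are pasted in as a string rather than fetched live.

```python
from urllib.robotparser import RobotFileParser

# The two rules from the post, with no blank line between them
# (a blank line would end the User-agent group for this parser).
ROBOTS_TXT = """\
User-agent: *
Crawl-Delay: 20
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URL and crawler name, used only to query the rules.
allowed = parser.can_fetch("Googlebot", "https://example.com/")
delay = parser.crawl_delay("Googlebot")

print("Fetch allowed:", allowed)
print("Crawl delay (seconds):", delay)
```

Because there is no `Disallow` line, this parser reports every URL as fetchable; the file only asks crawlers to wait between requests. How a given search engine treats `Crawl-Delay` is up to that crawler, so this only shows what the file says, not why the rankings moved.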
