- Joined: Apr 6, 2016
- Messages: 238
- Reaction score: 282

Working with @Amy Toman, we've found a few images that might cause a Google My Business (GMB) post to be filtered.

Here's a quick Twitter discussion we had today that may help explain the issue.

Amy's Tweet about rejected images in GMB Posts

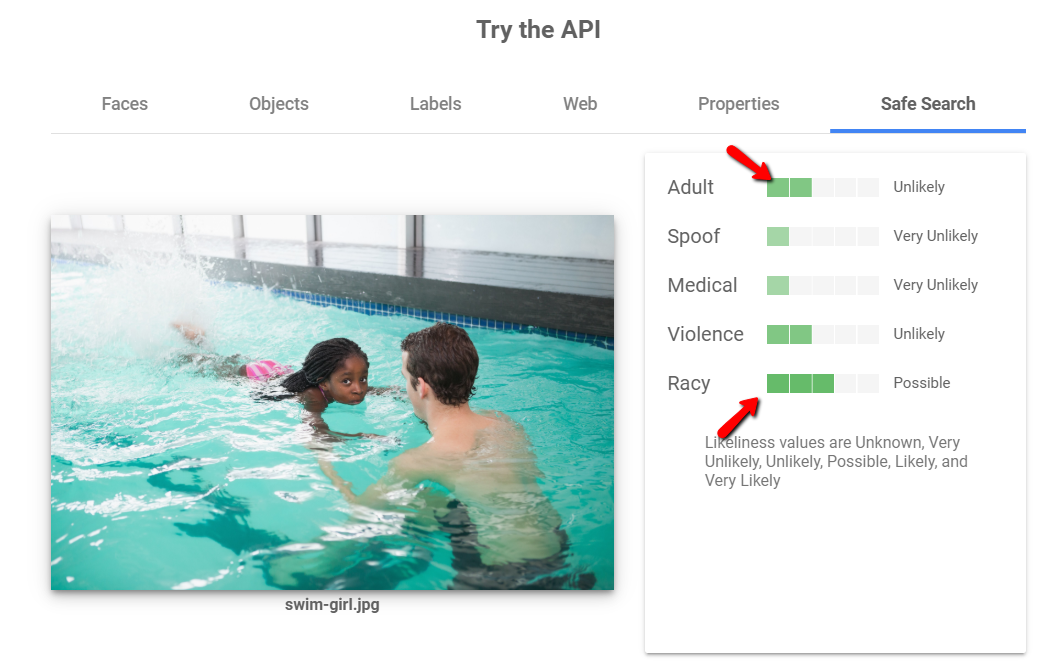

I then suggested using Google's Vision AI tool, which can help you judge whether your image is likely to be rated "Racy".
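If you'd rather script the check than use the web demo, a minimal sketch of the request is below. It assumes the Cloud Vision v1 REST endpoint `images:annotate` with the `SAFE_SEARCH_DETECTION` feature, which returns a `safeSearchAnnotation` containing a `racy` likelihood. The helper names `build_safe_search_request` and `extract_racy` are hypothetical, and sending the request (with your own API key or OAuth token) is left out.

```python
import base64
import json

# Cloud Vision v1 REST endpoint for image annotation (the POST itself is not shown here).
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_safe_search_request(image_bytes: bytes) -> str:
    """Hypothetical helper: JSON body asking Vision for SafeSearch likelihoods on one image."""
    body = {
        "requests": [
            {
                # The image is sent inline as base64-encoded bytes.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": "SAFE_SEARCH_DETECTION"}],
            }
        ]
    }
    return json.dumps(body)


def extract_racy(response_json: str) -> str:
    """Hypothetical helper: pull the "racy" likelihood (e.g. "VERY_UNLIKELY") from a response."""
    response = json.loads(response_json)
    return response["responses"][0]["safeSearchAnnotation"]["racy"]
```

You would POST the body to `VISION_ENDPOINT` and pass the raw JSON reply to `extract_racy`.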

My response and findings after using Google's Vision AI

So, if in doubt, "Try the API". It's probably best to use images in GMB Posts that come back as "very unlikely" on every indicator.
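The "very unlikely on every indicator" rule of thumb can be sketched as a simple check on a `safeSearchAnnotation` dictionary. This is only our heuristic, not Google's documented GMB filtering logic, which isn't public. The function name `looks_safe_for_gmb` and the choice to treat `UNKNOWN` or missing values as failures are assumptions.

```python
# Cloud Vision likelihood values, ordered from least to most likely.
# "UNKNOWN" is deliberately absent so it never counts as a pass.
LIKELIHOOD_ORDER = ["VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]
SAFE_SEARCH_CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def looks_safe_for_gmb(annotation: dict, max_allowed: str = "VERY_UNLIKELY",
                       categories=SAFE_SEARCH_CATEGORIES) -> bool:
    """Hypothetical heuristic: every category must be at or below max_allowed."""
    limit = LIKELIHOOD_ORDER.index(max_allowed)
    for category in categories:
        value = annotation.get(category, "UNKNOWN")
        # Missing, UNKNOWN or unexpected values fail, to stay on the cautious side.
        if value not in LIKELIHOOD_ORDER or LIKELIHOOD_ORDER.index(value) > limit:
            return False
    return True
```

Passing `categories=("racy",)` narrows the check to the "Racy" rating alone.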